The Data Quality Playbook: How Leading Enterprises Avoid Bad Data—and Bad Decisions

“Wait…that’s not right.” Have you ever found yourself questioning the data you just presented to leadership? You’re not alone. In B2B, data quality issues are more common and costly than you think.

“People have gotten really smart taking surveys, especially since the pandemic. Getting through a screener is pretty easy,” said Steven Rencher, senior research manager at accounting software company BILL.

“I’ve noticed some vendors lead the question to get people past the screener because they don’t care. To them, a complete is a complete, whether or not it meets your parameters.”

Learn how to trust your data—from the best

Measuring data quality is trickier than ever. That’s why we turned to our community of research and insights leaders at the world’s strongest, fastest-growing companies to build your playbook for B2B research quality. We wanted to know—how do industry giants like Google, Fidelity, PNC, Verizon, LinkedIn, and Visa evaluate data quality? How do they vet vendors, weed out bad actors, and keep up when their colleagues are clamoring for insights?

Our methodology for this data quality playbook

We interviewed over 40 research and insights leaders in the financial services and technology industries. We asked them questions like:

- What are your top pain points in conducting research today?

- How do you measure data quality and test for fraudulent respondents?

- How do you work with research partners to ensure you can trust the insights?

We captured and coded their responses to identify two distinct approaches to data quality management embodied by two archetypes: the Outsourcing Specialist and the Data Expert.

Which type of leader are you? The Outsourcing Specialist vs. the Data Expert

Are you a hands-on Data Expert or a strategic and streamlined Outsourcing Specialist? Understanding your research style can help you strengthen data quality while building efficient, transparent practices.

Archetype 1: The Outsourcing Specialist—The Orchestrator

Outsourcing Specialists are the maestros of delegation. They partner with external vendors to manage the entire research lifecycle, from recruitment to analysis. Their strength lies in leveraging external expertise to scale research efforts.

Workflow.

Outsourcing Specialists cultivate a select group of trusted vendors, often relying heavily on one or two for full-service research execution. Their primary focus is on research objectives and the narrative of the business findings and implications. That focus can leave a blind spot: limited visibility into the panel providers used for sample recruitment. It also creates a vulnerability, because vendors might use unnamed third-party sample providers without thorough vetting.

Pain points & approach to data quality.

What is a good slide presentation worth if it’s based on data you can’t trust? “I don’t know which sample providers our agency uses, but we pay a pretty high premium,” shared an insights leader at Visa.

“Maybe this is my naive perspective on it, but we’ve been almost solely reliant on the vendor doing the validation work. Maybe I should ask more questions.”

The primary challenge is dangerously limited visibility into data quality. Many fully trust vendors, assuming they’ve put the proper guardrails in place to verify respondents and remove low-quality answer patterns, such as straightlining, speeding, and inconsistencies.

“We trust our vendors, so data quality isn’t always top-of-mind,” said an insights manager at Mastercard. “We appreciate transparency, but it’s not something we actively manage on a daily basis.” Many Specialists also operate in high-pressure environments of “I needed insights yesterday.” Finding time to independently evaluate their data quality isn’t feasible.

Agencies, on the other hand, are often incentivized to use lower-cost sample vendors to improve their margins. Why would they pay a premium and cut into their margin if the enterprise client doesn’t even look into or question the data?

However, the implications are significant for those investing in the research. Unverified panel providers often send generic survey links to large distribution lists. These links allow panelists to self-identify as anyone, meaning there is no way to verify the validity or professional identity of respondents.

You’re walking on thin ice if you’re using that data to craft C-level recommendations with multi-million budget implications.

Outsourcing Specialists: 5 best practices for better data quality in B2B research.

Strong B2B research starts with strong data. These five best practices will help Outsourcing Specialists ensure quality at every step.

- Get involved in sample vendor selection. You can ask your agency to disclose sources or start building direct relationships with sample vendors. Ask the agency to run A/B tests between sample vendors to test quality and discuss budget implications. A smaller sample of verified respondents can be far more valuable than a large sample of pretenders.

- Cross-reference a vendor’s results with your own. To evaluate an agency’s sample quality, compare one of their data sets from an external sample to surveys conducted directly with your verified customers. Some differences are typical; huge variances are not.

- Run a quick reality check. Choose two or three questions from the survey, such as unaided awareness or consideration, and use your intuition to determine whether the patterns align with real market dynamics. For open-ended questions, do the responses reflect real subject matter expertise?

- Ask your agency to report on data quality KPIs. Metrics you can request include quality removal, fraud, and incidence rates. The NewtonX seven KPI data quality framework provides more information.

- Invest in verified samples for hard-to-reach audiences. Identify which audiences pose the most significant challenge in finding or capturing high-quality responses. Invest in verified samples to reduce your risk of low-quality data.
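The KPIs named in the list above can be tallied from simple field counts. The following Python snippet is a minimal sketch, not the NewtonX framework: the field names, the example counts, and the formulas themselves are assumptions, since the playbook does not define them. Confirm the exact definitions with your agency before comparing vendors.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FieldCounts:
    """Hypothetical per-vendor tallies; all field names are illustrative assumptions."""
    screened: int         # respondents who started the screener
    qualified: int        # respondents who passed the screener
    completes: int        # qualified respondents who finished the survey
    quality_removed: int  # completes removed for speeding, straightlining, etc.
    fraud_flagged: int    # completes flagged as fraudulent or misrepresented


def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def quality_kpis(c: FieldCounts) -> Dict[str, float]:
    """Compute three KPIs under assumed definitions (not taken from the playbook)."""
    return {
        # Share of screened respondents who qualified for the study.
        "incidence_rate": rate(c.qualified, c.screened),
        # Share of completes discarded during quality cleaning.
        "quality_removal_rate": rate(c.quality_removed, c.completes),
        # Share of completes flagged as fraudulent.
        "fraud_rate": rate(c.fraud_flagged, c.completes),
    }


# Made-up counts, for illustration only.
example = FieldCounts(
    screened=2000, qualified=400, completes=350,
    quality_removed=70, fraud_flagged=35,
)
kpis = quality_kpis(example)
```

Reporting the same three numbers for every vendor and audience makes it easier to spot a source whose removal or fraud rate is out of line with the others.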

Archetype 2: The Data Expert—The Craftsperson

Data Experts are meticulous artisans of research. They adopt a hands-on approach and desire visibility into every process stage. Their strength lies in their deep understanding of data and commitment to rigorous quality control.

Workflow.

Data Experts are deeply involved in every project phase, from design to analysis. They prioritize comprehensive data access and integrate quality control directly into their research processes. This approach ensures meticulous oversight but can limit the number of projects they can manage simultaneously.

Data Experts have agency partners for end-to-end work, but it’s not their default approach, given the budget implications. They often keep services in-house and partner directly with sample providers if they have the internal resources and subject matter expertise.

Pain points & approach to data quality.

The Data Expert feels the tension between ensuring quality and delivering strategic, timely insights. This archetype may sense when data can’t be fully trusted, but they don’t have a structured way to measure data quality or the bandwidth to build one. Data Experts already have a full workload, managing research end-to-end with limited agency support.

Data Experts understand the difference in data quality between unverified panels and custom-recruited premium sample, but they struggle to make a case internally for higher budgets. “I would love to use verified sample for all my research, but it’s simply not feasible with budgets. That’s why I use NewtonX whenever I can’t afford mistakes and default to panels when it’s less critical, but I don’t feel good about the quality,” said a research leader at a FinTech company.

Using unverified panel sample risks wrong business insights and can drain resources. “I want to replace panel with premium sample, but how do I make a case for budget and explain to my boss that the data we used the last three years can’t be trusted? I am spending hours and hours cleaning panel data to make it work, but I still don’t trust it,” said an insights manager at Intuit.

Data Experts define quality through personal control. They value hands-on validation and rigorous quality checks, often analyzing data line-by-line.

A VP of research at Meta summarized her approach this way:

“You get the sample, you understand who that person is, and then you can link back to their LinkedIn page to determine whether or not this person is currently in the role and is functioning in the capacity they self-report as being.”

Investigating each respondent this way isn’t scalable, which is why Data Experts often focus on cleaning data from obvious bad actors who speed, straightline, fail red herrings, or show bot-like patterns. This process can be very time-consuming with panel data and still leaves the most critical question unanswered: Are respondents who they say they are?

Data Experts: 5 best practices for better data quality in B2B research.

For Data Experts, quality is everything—but so is efficiency. These five best practices will help you maintain rigorous standards and keep insights rock-solid, all without slowing you down.

- Request automated removals during the survey workflow. Partner with your vendor to ensure respondents who fail quality checks—for instance, straight-lining, speeding, or AI copy/pasting—get tossed out immediately to save time on data cleaning.

- Always measure data quality KPIs across audiences and sample vendors. This will reduce the risk of wasting resources on worthless data. Partner with vendors that know exactly who each respondent is and can append respondent data or set up a follow-up call with individuals whenever there are open questions.

- Set up A/B tests that identify the pretenders. Fraudsters have become adept at passing basic bad-actor survey checks and screener questions. With A/B tests, you can flag people taking on false identities based on expected answer patterns from true subject experts.

- Partner with plug-and-play sample vendors that can provide quick-turn services to fill resource gaps. Internal resourcing and priorities can change quickly. For instance, if a new, urgent project pops up, and you’re resource-constrained on survey design, they can jump in immediately—and you can keep the analysis in-house.

- Try innovating to study hard-to-reach audiences. New technologies such as synthetic data can help you get high-quality, feasible data from these audiences without spending a fortune.
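Two of the checks mentioned in the list above, speeding and straightlining, are simple enough to sketch in code. The Python example below is a minimal illustration under stated assumptions: the record fields (`id`, `seconds`, `grid_answers`), the one-third-of-median speed cutoff, and the sample records are all hypothetical, and real vendors may use different thresholds and additional checks.

```python
from statistics import median
from typing import Dict, List


def flag_low_quality(
    responses: List[Dict],
    speed_fraction: float = 0.33,  # assumed cutoff: faster than 1/3 of the median time
) -> Dict[str, List[str]]:
    """Map each flagged respondent id to the list of reasons it was flagged."""
    cutoff = speed_fraction * median(r["seconds"] for r in responses)
    flags: Dict[str, List[str]] = {}
    for r in responses:
        reasons = []
        # Speeding: completion time far below the typical respondent's.
        if r["seconds"] < cutoff:
            reasons.append("speeding")
        # Straightlining: every item in a rating grid received the same answer.
        grid = r["grid_answers"]
        if len(grid) > 1 and len(set(grid)) == 1:
            reasons.append("straightlining")
        if reasons:
            flags[r["id"]] = reasons
    return flags


# Made-up records, for illustration only.
responses = [
    {"id": "r1", "seconds": 600, "grid_answers": [4, 2, 5, 3, 4]},
    {"id": "r2", "seconds": 540, "grid_answers": [3, 3, 3, 3, 3]},
    {"id": "r3", "seconds": 90, "grid_answers": [5, 1, 4, 2, 3]},
    {"id": "r4", "seconds": 660, "grid_answers": [2, 4, 3, 5, 1]},
]
flags = flag_low_quality(responses)
```

Checks like these only catch obvious bad actors. They do not verify that a respondent is who they claim to be, which is why the playbook pairs them with verified sample and A/B tests.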

Case study: Identifying pretenders with an A/B test

Verifying respondents’ identities and expertise is essential to data quality. A simple A/B test between providers—best practice #3 for Data Experts—can help identify which data partners prioritize doing so and which don’t.

Let’s consider a business intelligence (BI) software case study. Tableau and Microsoft Power BI are the clear leaders with powerful, innovative solutions. SAP and Oracle are strong players in enterprise resource planning (ERP), but their BI solutions lag in functionality and market penetration.

Now, imagine you manage insights for a BI company, and you just spent millions on a brand and product strategy. The results suggest your biggest competitors are Oracle and SAP, the laggards in BI. But, in reality, your biggest competition is Tableau. Not fun, is it?

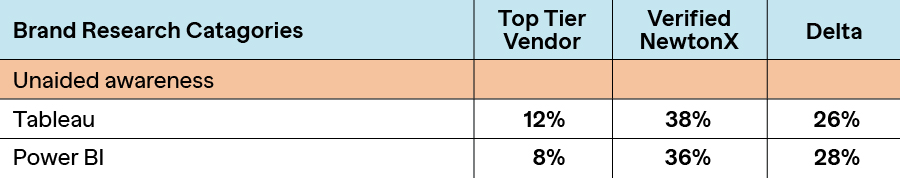

A/B testing samples from your data partners could have revealed the truth. We ran a large-scale A/B test, surveying over 1,000 BI software decision-makers, and found data differences of up to 40% between verified and unverified sample sources. This can have substantial business implications.

In the sample from a top-tier vendor, unaided awareness for Tableau is extremely low at 12%. This suggests that a large portion of that sample may not be in the BI space. The verified sample, at 38%, is much more in line with market penetration.

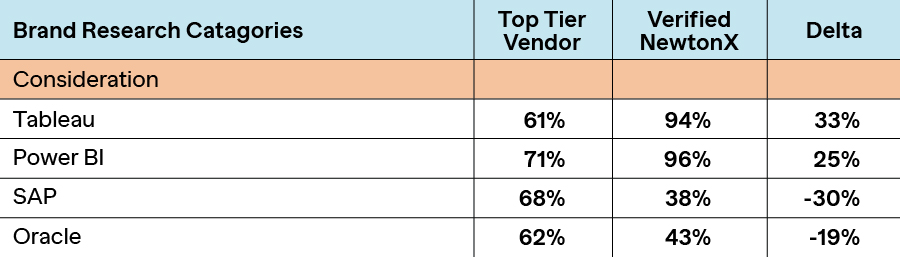

Similar patterns emerge when looking at Consideration scores. Respondents from the top-tier vendor have fallen for a halo effect. In other words, they gave high scores to Oracle and SAP, likely due to broad brand recognition, and they unwittingly projected strength in one area (ERP) onto another (BI).

Most BI decision-makers who genuinely know the space would have remarkably differentiated scores between the actual leading players—Tableau and Power BI—and the laggards Oracle and SAP. This difference is reflected in the verified sample, where each respondent has been verified as someone with true knowledge of the BI space.

After running similar tests, a research director at Google changed how they operate: “It wasn’t easy to push through internally, but I stopped using unverified sample. There is no way I will risk my reputation using that data.”

Relying on fraudulent respondents to inform business decisions should not be an option. Data quality can be measured. Challenge your sample vendors and design similar A/B tests to see for yourself.
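When you run your own A/B test, one way to check whether a gap between two sample sources is larger than sampling noise is a two-proportion z-test. The Python sketch below is an illustration, not the method used in the case study: the 38% and 12% awareness figures come from the case study above, but the per-source sample sizes of 500 are hypothetical, since the article reports only the overall total of over 1,000 respondents.

```python
from math import erfc, sqrt
from typing import Tuple


def two_proportion_z_test(
    p_a: float, n_a: int, p_b: float, n_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two sample proportions."""
    # Pooled proportion under the null hypothesis that both sources share one true rate.
    pooled = (p_a * n_a + p_b * n_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # Two-sided tail probability of a standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# 0.38 and 0.12 are the unaided-awareness figures quoted in the case study.
# The sample sizes (500 per source) are hypothetical assumptions.
z, p_value = two_proportion_z_test(0.38, 500, 0.12, 500)
```

A very small p-value indicates that the two sources are unlikely to be sampling the same population. It does not say which source is right; for that, compare each result against known market benchmarks, as the case study does with market penetration.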

The new standard: Measure data quality with precision

To elevate B2B research, both archetypes need a rigorous, measurable framework that prioritizes premium sample and partners with you in protecting your data’s quality. The NewtonX seven KPI data quality framework can help you set the standard and hold every agency and sample vendor accountable.

When data quality matters, NewtonX delivers. Put our framework to the test, and you’ll see firsthand how better data leads to better decisions. Try us for your next project and experience the benefits of having a true partner in data integrity.
